All,

We are currently bringing the platform into compliance with GDPR constraints; in particular, this limits access to the Slurm database.

The squeue tool is affected by this limitation: users only see information about their own jobs when making queries (this is also the case for all other Slurm commands: scontrol, sacct...). The sinfo command can still be used to get an overview of the platform usage.

The state of the nodes and a filtered output of the squeue command are available on the platform web page https://www.plafrim.fr/jobs-monitoring/

This page is currently being updated to report as much information as possible to users. We will keep working on it in the coming days and weeks, to hopefully meet everyone's expectations.

Please note that this address is only available once you are signed in on the website (you need to use the WP identifiers obtained at account creation).

Cheers,

The PlaFRIM team

PlaFRIM discussion team on mattermost

Dear all,

A PlaFRIM discussion team is now available on the Inria mattermost server. PlaFRIM users have received an email with the link to join.

This team is intended for discussions on any subject related to the use of the platform. Channels can be created for specific needs.

In any case, here are some rules to follow:

- DO NOT USE any channel to report tickets. Sending an email to the technical team at plafrim-support AT inria.fr is the only way to submit tickets.

- Refrain from NON-SERIOUS conversations and trolling.

For those who do not have an Inria email address, an external account must be created for you to access the mattermost server. Please send an email to nathalie.furmento AT labri.fr if you need such an account.

Cheers,

The PlaFRIM technical team

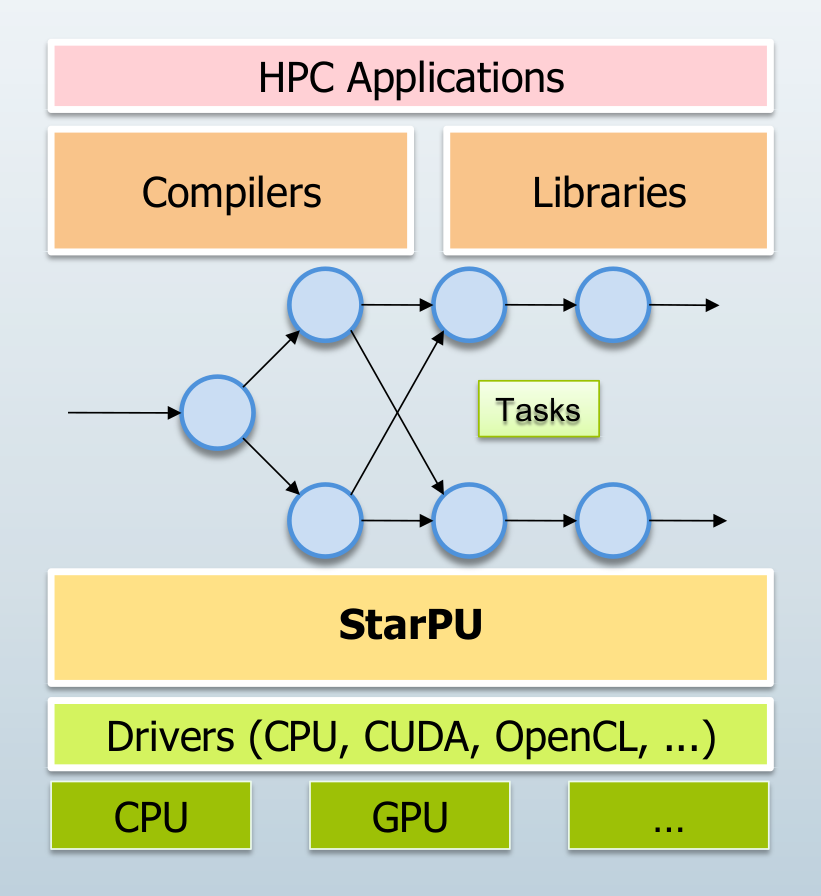

Building A High-Performance Solver Stack on Top of a Runtime System

The HiePACS, Storm and Tadaam teams have been cooperating for more than a decade on the idea of building numerical solvers on top of parallel runtime systems.

From the early static/dynamic scheduling experiments explored in Mathieu Faverge's PhD, defended in 2009, to the full-featured SolverStack suite of numerical solvers running on modern task-based runtime systems such as StarPU and PaRSEC, this idea of delegating part of the optimization process from solvers to an external system has been successful. The communication library NewMadeleine is also part of this HPC software stack.

PlaFRIM has always been a key enabling component of these collaborations. Its heterogeneous computing units (standard nodes, GPU, Intel KNL, NUMA nodes, …) have made the development and validation of our software stack easier. Our use of the platform has also enabled multiple collaborations with national and international universities and industrial partners.

Contact: Olivier Aumage oliver.aumage AT inria.fr

Predictive Rendering

To generate photo-realistic images, one needs to simulate the light transport inside a chosen virtual scene observed from a virtual viewpoint (i.e., a virtual camera). A virtual scene is obtained by modelling (or measuring from the real world):

– the shapes of the objects and the light sources,

– the reflectance and transmittance of the materials,

– the spectral emittance of the light sources.

Simulating the light transport is done by solving the recursive Rendering Equation. This equation states that the equilibrium radiance (in W·m⁻²·sr⁻¹ per wavelength) leaving a point is the sum of emitted and reflected radiance under a geometric optics approximation.
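For reference, the Rendering Equation is commonly written in the following textbook form. The notation is an assumption made here for illustration and does not come from this page: x is a surface point with normal n, ω_o and ω_i are the outgoing and incoming directions, λ is the wavelength, L_e is the emitted radiance, f_r is the material's reflectance function (BRDF), and Ω is the hemisphere around n.

```latex
L_o(x, \omega_o, \lambda) = L_e(x, \omega_o, \lambda)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o, \lambda)\,
    L_i(x, \omega_i, \lambda)\, (\omega_i \cdot n)\, \mathrm{d}\omega_i
```

The recursion comes from the incoming radiance L_i at x, which is itself the outgoing radiance L_o leaving the point visible from x in direction ω_i.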

The Rendering Equation is therefore directly related to the law of conservation of energy. It is solved with Monte Carlo computations. In the context of Computer Graphics, a Monte Carlo sample is a geometric ray carrying radiance along its path, which is constructed stochastically (e.g., using Russian roulette).
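To illustrate how Russian roulette builds a path stochastically without biasing the result, here is a minimal sketch in Python. It is a toy setting chosen for this example, not the rendering pipeline used on PlaFRIM: every surface is assumed to emit the same radiance and reflect a fixed fraction (albedo) of the incoming light, so the recursive equation reduces to L = emitted + albedo · L, with the known solution emitted / (1 − albedo). All function and parameter names are hypothetical.

```python
import random


def sample_path_radiance(emitted, albedo, survival_prob, rng):
    """Return one Monte Carlo sample of L = emitted + albedo * L.

    The path is extended bounce by bounce. After each bounce, Russian
    roulette keeps the path alive with probability survival_prob and
    divides the throughput by that probability, so the expected value
    of the sample is unchanged.
    """
    radiance = 0.0
    throughput = 1.0  # fraction of light carried back along the path so far
    while True:
        # Every surface hit contributes its emitted radiance.
        radiance += throughput * emitted
        # Russian roulette: terminate the path at random.
        if rng.random() >= survival_prob:
            return radiance
        # Surviving paths are reweighted to compensate for terminated ones.
        throughput *= albedo / survival_prob


def estimate_radiance(emitted, albedo, survival_prob, num_paths, seed=0):
    """Average many independent path samples (toy scene, assumed setup)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_paths):
        total += sample_path_radiance(emitted, albedo, survival_prob, rng)
    return total / num_paths


if __name__ == "__main__":
    emitted, albedo = 1.0, 0.5
    estimate = estimate_radiance(emitted, albedo, survival_prob=0.7, num_paths=200_000)
    exact = emitted / (1.0 - albedo)
    print(f"estimate = {estimate:.4f}, closed form = {exact:.4f}")
```

The estimate should approach the closed-form value as the number of paths grows. A real renderer replaces the constant emission and albedo with ray casting against the scene geometry and sampling of the material model.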

PlaFRIM allows researchers in Computer Graphics to simulate billions of light paths/rays to generate reference images for a given virtual scene.

These data can be used to validate:

– new models that predict how light is scattered by a material,

– new rendering algorithms that are more efficient in terms of variance but also in terms of parallelism.

Indeed, PlaFRIM offers a wide range of computing nodes (CPU, bi-GPU) that allow us to develop, test and validate the whole rendering pipeline.

Contact: Romain Pacanowski romain.pacanowski AT inria.fr

PlaFRIM is back

The shutdown is now over and PlaFRIM is available again.

Closure of PlaFRIM from April 24 to 27, 2020

Because of the annual full electrical shutdown of the building scheduled for 25 April, we have to shut down the PlaFRIM infrastructure starting in the afternoon of 24 April.

Normal service is scheduled to resume on Monday 27 April during the morning.

Thank you for your understanding.

Degraded mode

The current health situation forces us to work in degraded mode. We are doing what is necessary to keep PlaFRIM operational as long as the building remains open.

User follow-up and support will probably also be degraded.

Of course, we can still be reached at plafrim-support@inria.fr.

Thank you for your understanding.

New parallel storage space

A new parallel BeeGFS file system (see https://www.beegfs.io/content/) is now available on PlaFRIM. You will find a /beegfs/<LOGIN> directory on all PlaFRIM nodes.

This parallel file system replaces the /lustre storage, which is no longer under warranty and whose use is no longer recommended.

The data in this directory are not backed up. The quota is 1 TB per user.

If you need to write/read massively and/or in parallel, this is the preferred file system, rather than your /home/<LOGIN> directory.

Information on all available storage spaces can be found in the FAQ on the PlaFRIM website.

Please do not hesitate to contact us if you need further information.

New AMD nodes available

New AMD compute nodes are now available (see Hardware Documentation):

– diablo[01-04]: 2 * AMD EPYC 7452 32-Core Processor and 256 GB RAM

– diablo05: 2 * AMD EPYC 7702 64-Core Processor and 1 TB RAM

To reserve them, use the “amd” or “diablo” constraints.
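As an illustration, the sketch below generates a Slurm batch script that requests one of these constraints through the standard `--constraint` option of sbatch (short form `-C`). The helper name, the `hostname` command, the node count and the time limit are assumptions chosen for the example; the platform may require additional options that are not listed here.

```python
def make_batch_script(command, constraint="amd", nodes=1, time_limit="00:10:00"):
    """Return the text of a Slurm batch script restricted to nodes
    carrying the given feature constraint (e.g. "amd" or "diablo").

    Hypothetical helper: only the --constraint value comes from the
    announcement; the other settings are illustrative defaults.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --constraint={constraint}",  # select nodes by feature
        f"#SBATCH --nodes={nodes}",            # number of nodes (assumed)
        f"#SBATCH --time={time_limit}",        # wall-clock limit (assumed)
        command,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print a script that runs `hostname` on one AMD node.
    print(make_batch_script("hostname", constraint="diablo"), end="")
```

The generated text can be saved to a file and submitted with `sbatch`.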

Please do not hesitate to contact us if you need further information.