All,

We are currently bringing the platform into compliance with GDPR constraints, which in particular limits access to the Slurm database. The squeue tool is affected by this limitation: users only see information about their own jobs when making queries (this is also the case for all other Slurm commands: scontrol, sacct...). The sinfo command can still be used to get an overview of the platform usage.

The state of the nodes and a filtered output of the squeue command are available on the platform web page https://www.plafrim.fr/jobs-monitoring/

This page is currently being updated to report as much information as possible to users. We will keep working on it in the coming days and weeks, to hopefully meet everyone's expectations. Please note that this address is only available once signed in on the website (you need to use the WP identifiers obtained at account creation).

Cheers,

The PlaFRIM team

Author: François Rue

2020-04-15: GT Infra – PlaFRIM

Below is a presentation of PlaFRIM intended for the Infra working group and, more broadly, for anyone who wants to understand the scientific project carried by the platform.

Degraded mode

The current health situation forces us to work in degraded mode. We are doing what is necessary to keep PlaFRIM operational as long as the building remains open.

User follow-up and support will probably also be degraded.

Of course, we can still be reached at plafrim-support@inria.fr.

Thank you for your understanding.

Migration

As indicated in the message of November 8th, the migration is ongoing.

Currently, as a reminder:

1. when you connect to PlaFRIM, you arrive on the devel01 or devel02 front nodes, which are latest-generation Skylake machines.

2. you can still connect to the old front node by typing 'ssh plafrim2'; this part of the platform (miriel, breeze, mouse, arm01, sirocco…) will soon be migrated to the new version of the platform

3. soon, you will find on PlaFRIM:

* the machines you have been working on until now

* the new bora machines (dual-socket Skylake 36 cores and 192 GB of memory)

* modules, with default modules dedicated to the target architecture you are working on

* a /dev space for modules where all users can make their own software stacks available to other users; all previous dev modules will have to be rebuilt, as the old ones no longer work on this new version of the platform

* a single slurm partition (routing) that lets you address all machines; to choose a particular category of machines, specify the associated “feature” using the -C option of slurm (salloc -C Bora, for example); to list the “features” associated with each node, run sinfo -Nel

* guix to manage your experimental environments
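The feature-based node selection described in the list above can be sketched as follows. This is only an illustration: the helper name build_salloc is hypothetical, it prints the command rather than running it, and the feature name must be one that sinfo -Nel actually reports on the platform.

```shell
# Build (but do not run) the allocation command for a given node feature.
# build_salloc is a hypothetical helper, not a PlaFRIM or Slurm tool.
build_salloc() {
    printf 'salloc -C %s\n' "$1"
}

# List nodes together with their features, only where Slurm is installed.
if command -v sinfo >/dev/null 2>&1; then
    sinfo -Nel
fi

# Print the command that would request nodes carrying the "Bora" feature.
build_salloc Bora
```

Printing the command first makes it easy to check the feature name before actually requesting an allocation.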

Finally, if on your first connection to this new platform you get a message containing:

> ssh-keygen -f "~/.ssh/known_hosts" -R "plafrim-ext"

run the given command and everything should work.

PlaFRIM evolutions and calendar

We remind you that from next Tuesday, disruptions are to be expected on PlaFRIM.

When you connect to PlaFRIM, you will land on the newly available nodes (devel01 and devel02). You will then be able to submit jobs on the new bora001 to bora040 nodes.

As long as PlaFRIM2 nodes are not migrated to PlaFRIM3, you can still connect to the old development nodes (devel11 to devel13) via the “ssh plafrim2” command from the new devel nodes (devel01 or devel02).

The planned schedule remains the same: migrate as many nodes as possible to PlaFRIM3 over the next week.

We still have some configuration to do before everything is fully operational on PlaFRIM3. Feel free to test it next Tuesday and give us feedback via plafrim-support@inria.fr.

Feel free to contact us for any information you may need about this migration.

2017-03-28: Intel KNL Seminar

Tuesday, 28 March 2017, from 2pm to 4pm

Room: Ada Lovelace (Inria Bordeaux, 3rd floor)

Programme

- Xeon Phi Architecture Overview and roadmap – Asma Farjallah, HPC Application Engineer (Intel) & Alexandre Chauvin, HPC Account Executive (Intel)

- Evaluation and Usability of the Cache Aware Roofline Model on PlaFRIM Knight Landings – Nicolas Denoyelle (TADaaM)

- Evaluating the impact of Intel KNL memory settings on performance through case studies – Ian Masliah (HiePACS)

- Exploiting multi-level parallelism on Intel KNL – Terry Cojean (STORM)

- Exchanges

Development nodes reboot

The development nodes (devel1[1-3]) will be restarted on Friday 24th March 2017, at noon.

This will allow these machines to be updated.

The operation will take one hour.

KNL Cluster

A cluster based on Intel Knights Landing (KNL) was installed in February 2017.

The cluster consists of 4 KNL nodes interconnected by OmniPath.

Knights Landing (7230) is a highly configurable architecture. Memory bandwidth is one of the common bottlenecks for performance in computational applications. KNL offers a solution to this problem.

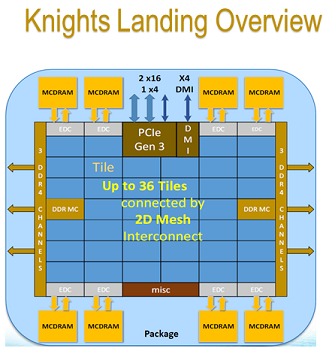

To do so, the 2nd generation of Intel Xeon Phi (KNL) has on-package high-bandwidth memory (HBM) based on multi-channel dynamic random access memory (MCDRAM). This memory can deliver up to 5x the bandwidth (≥400 GB/s) of the DDR4 memory on the same platform (≥90 GB/s).
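As a rough back-of-the-envelope illustration of these figures, the sketch below computes how long it would take to stream 16 GB (the maximum HBM capacity) at each of the two quoted bandwidth floors. The helper name stream_time is hypothetical, and the calculation ignores latency, caching and access patterns, so it is an idealized estimate rather than a measurement.

```shell
# Seconds needed to stream SIZE_GB gigabytes at BW_GBS gigabytes per second.
# stream_time is a hypothetical helper used only for this illustration.
stream_time() {
    awk -v size="$1" -v bw="$2" 'BEGIN { printf "%.3f\n", size / bw }'
}

stream_time 16 400   # MCDRAM at the quoted 400 GB/s floor -> 0.040
stream_time 16 90    # DDR4 at the quoted 90 GB/s floor    -> 0.178

# Ratio of the two quoted floors -> 4.4
awk 'BEGIN { printf "%.1f\n", 400 / 90 }'
```

The two floors alone give a ratio of about 4.4x, which is consistent with the "up to 5x" figure.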

The on-package HBM (labeled “MCDRAM” in the figure) resides on the CPU package, next to the processing cores. KNL may have up to 16 GB of HBM. It is highly configurable; its modes (which can only be changed through the BIOS) are the following:

- cache

- flat

- hybrid

More information is available in this Intel tutorial.
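In flat mode, the MCDRAM is exposed to the operating system as one or more NUMA nodes that have memory but no CPUs, so a quick way to see how a node is configured is to look for such nodes in the output of numactl -H. The sketch below assumes numactl is installed; the helper name cpuless_nodes, the sample input and ./my_app are hypothetical, and the actual node numbers depend on the clustering mode of the machine.

```shell
# Print the IDs of NUMA nodes that have no CPUs, reading `numactl -H`
# output on stdin. cpuless_nodes is a hypothetical helper.
cpuless_nodes() {
    awk '$1 == "node" && $3 == "cpus:" && NF == 3 { print $2 }'
}

# Made-up, shortened sample of the relevant lines: node 1 has no CPUs.
printf 'node 0 cpus: 0 1 2 3\nnode 1 cpus:\n' | cpuless_nodes

# On a real node (./my_app is a placeholder for your own program):
#   numactl -H | cpuless_nodes
#   numactl --membind=1 ./my_app
```

If no CPU-less node is listed, the MCDRAM is most likely being used as a cache and is not directly addressable.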

The KNL (7230 version) has 64 cores, organized on a grid as follows:

- 32 tiles, each with 2 cores (each core with its own L1 cache) and 1 shared L2 cache.

- all shared L2 caches are interconnected by the grid; the MESIF protocol keeps all the caches coherent

- all links are bidirectional

- KNL has a distributed tag directory (DTD), organized as a set of per-tile tag directories (TD), which identify the state and the location on the chip of any cache line. It is in the developer's interest to keep the resulting coherence messages local, to achieve the lowest latency and greatest bandwidth of communication with caches.

KNL supports the following cache clustering modes:

- all-to-all

- quadrant / hemisphere

- SNC-4 / SNC-2

More information is available in the Colfax documentation.

To allow users to test the different configurations, each of the 4 nodes of the cluster has been configured with different parameters. Information on the different configurations is available on the PlaFRIM hardware page.